Welcome to Our Blog

Train a Perceptron to Learn the AND Gate from Scratch in Python

What will you learn in this post? Neural Networks are function approximators. For example, in a supervised learning setting, given

Linear Regression from Scratch using Numpy

Hello friends and welcome to MLDawn! So what sometimes concerns me is the fact that magnificent packages such as PyTorch

Birth of Error Functions in Artificial Neural Networks

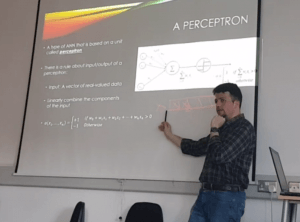

This talk was delivered at a PyData meetup. It was an amazing meetup, with great talks and ideas being discussed!

Binary Classification from Scratch using Numpy

Hello friends and welcome to MLDawn! So what sometimes concerns me is the fact that magnificent packages such as PyTorch

The Decision Tree Algorithm: Fighting Over-Fitting Issue – Part(2): Reduced Error Pruning

What is this post about? In the previous post, we learned all about what over-fitting is, and why over-fitting to

The Decision Tree Algorithm: Fighting Over-Fitting Issue – Part(1): What is over-fitting?

What is this post about, and what is next? Hi there!!! Let’s cut to the chase, shall we?! You have

The Decision Tree Algorithm: Information Gain

Which attribute to choose? (Part-2) Today we are going to touch on something quite exciting. In our previous post we

The Decision Tree Algorithm: Entropy

Which Attribute to Choose? (Part-1)

In our last post, we introduced the idea of decision trees (DTs), and you got the big picture. Now it is time to get into some of the details. For example, how does a DT choose the best attribute from the dataset? There must be a way for the DT to compare the worth of each attribute and figure out which attribute leads us to purer sub-tables (i.e., more certainty). There is indeed a famous quantitative measure for this called Information Gain. But in order to understand it, we first have to learn about the concept of Entropy. As a reminder, here is our training set:

![Training set](https://www.mldawn.com/wp-content/uploads/2019/02/which-attribute-to-choose-1024x580.png)

What is Entropy?

The entropy of a set of examples tells us how pure that set is! For example, suppose we have 2 sets of fruits: 1) 5 apples and 5 oranges, and 2) 9 apples and 1 orange. We say that set 2 is much purer (i.e., has much lower entropy) than set 1, as it consists almost entirely of apples. Set 1, however, is a half-and-half situation and is very impure (i.e., has much higher entropy), as neither apples nor oranges dominate! Now, back to the adults' world and enough with fruits :-)
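To put numbers on the fruit example, here is a minimal sketch in Python. It assumes the standard Shannon entropy with base-2 logarithms (the usual choice in decision-tree learning), H = -Σ p_i · log2(p_i), where p_i is the fraction of examples in class i. The function `entropy` and the variables `set_1` and `set_2` are hypothetical names used only for illustration.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a collection of class labels.

    Assumes the standard definition H = -sum(p_i * log2(p_i)).
    Counter only stores labels that actually occur, so p_i > 0
    and log2 is never called on zero.
    """
    counts = Counter(labels)
    total = len(labels)
    h = 0.0
    for count in counts.values():
        p = count / total          # proportion of this class in the set
        h -= p * math.log2(p)      # accumulate -p * log2(p)
    return h

# The two fruit sets from the text (hypothetical variable names).
set_1 = ["apple"] * 5 + ["orange"] * 5   # half-and-half: impure
set_2 = ["apple"] * 9 + ["orange"] * 1   # mostly apples: purer

print(f"Set 1 entropy: {entropy(set_1):.3f} bits")  # → Set 1 entropy: 1.000 bits
print(f"Set 2 entropy: {entropy(set_2):.3f} bits")  # → Set 2 entropy: 0.469 bits
```

The half-and-half set reaches the maximum of 1 bit for two classes, while the mostly-apples set drops to about 0.469 bits. A set containing only apples would score exactly 0.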

In a binary classification problem, such as the dataset above, we have 2 sets

The Decision Tree Algorithm: A Gentle Introduction

What will you learn? You will learn how a decision tree works, but without going into the details. For example,