What is Reproducibility All About?

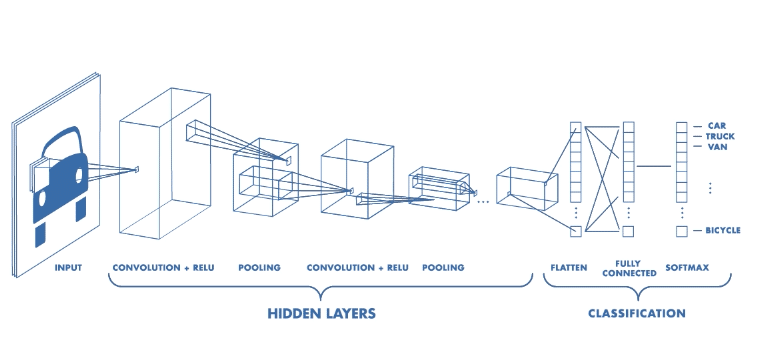

As a computer scientist or an academic, you run experiments with a bunch of algorithms. Let’s say you have coded a machine learning algorithm, like an artificial neural network, and after experimenting with different datasets, you have found that the best-performing network has one specific architecture.

So, you know that the best performance happens with this exact architecture, and you have proof of that, because you were a good programmer and saved all your results. The problem arises when you try to reproduce those results on the very same machine. You choose the same architecture, with the same number of convolutional layers, the same kernel size for your convolutions, and the same pooling in your pooling layers, but your algorithm performs differently! If you are unlucky, the results can be quite poor. This is what happened to me, and I struggled with it for 3 straight weeks until I found a solid solution, which I am going to share with you 😉

This is when you say that an experiment is NOT reproducible!

In this post, I will address this notorious problem when coding with PyTorch and Python. I struggled with my script for over 3 weeks and learned about some very nasty pitfalls along the way. I will share them all with you, so you do not have to experience the same pain ;-).

The Guidelines for Reproducibility in PyTorch

- Seed every random number generator anywhere in your code. The hiding places for random number generators in Python scripts are:

  - NumPy’s random number generator: If your code uses any of NumPy’s sampling or random number generation methods, you need to seed NumPy’s random number generator.

  - Python’s random number generator: Python’s random module provides functionality such as shuffling a list, and if your code uses any of it, you need to make sure that you have seeded Python’s random number generator.

  - The PYTHONHASHSEED environment variable: Python randomizes the hashes of strings and bytes per process, which can change the iteration order of sets between runs. Fixing PYTHONHASHSEED removes this source of variation. Note that the variable is read when the interpreter starts, so setting it from inside a running script only affects processes launched afterwards.

```python
# Import the packages that need to be controlled for reproducibility
import os
import random as r

import numpy as np
import torch

# Fix Python's hash seed. The interpreter reads this variable at startup,
# so setting it here only affects processes launched from this script.
os.environ['PYTHONHASHSEED'] = str(1)

# Seed NumPy's global random number generator (sampling, shuffling, ...)
np.random.seed(1)

# Seed Python's random number generator (random.shuffle, random.sample, ...)
r.seed(1)
```
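Because PYTHONHASHSEED is read at interpreter startup, the easiest way to see its effect is to launch fresh interpreters. The following is a minimal sketch using only the standard library; the helper name `hash_in_child` and the hashed string are made up for illustration and are not part of the original script.

```python
import os
import subprocess
import sys


def hash_in_child(seed: str) -> str:
    """Start a fresh interpreter with PYTHONHASHSEED set and return hash('reproducibility')."""
    env = {**os.environ, "PYTHONHASHSEED": seed}
    result = subprocess.run(
        [sys.executable, "-c", "print(hash('reproducibility'))"],
        env=env,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


# Two separate child processes started with the same hash seed
first = hash_in_child("1")
second = hash_in_child("1")
print(first == second)  # the string hash is identical in both children
```

In practice this means exporting the variable in the shell (or in the job script on your server) before starting Python, rather than relying on an assignment inside the script itself.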

- Now, PyTorch has its own devilment, and this is the part you are probably struggling with, so pay attention.

  - The general PyTorch source of randomness: torch.manual_seed controls PyTorch’s general randomness, including CPU operations. In recent PyTorch versions it seeds the random number generators on all devices, CUDA included.

  - The specific CUDA seeding: This comes in 2 variants, depending on whether the code is running on 1 GPU or on multiple GPUs. It has nothing to do with CPU operations!

  - cuDNN’s behavior: This needs to be deterministic, and that is done in 2 steps as well: disabling the auto-tuner and forcing deterministic algorithms.

```python
import torch

# Seed PyTorch's random number generators in general
torch.manual_seed(0)

# If you are using CUDA on 1 GPU, seed it
torch.cuda.manual_seed(0)

# If you are using CUDA on more than 1 GPU, seed them all
torch.cuda.manual_seed_all(0)

# Disable the built-in cuDNN auto-tuner that picks the fastest algorithm for your hardware
torch.backends.cudnn.benchmark = False

# Certain cuDNN operations are not deterministic, and this line forces them to behave!
torch.backends.cudnn.deterministic = True
```
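All of the seeding calls so far can be collected into a single helper that you call once at the top of every entry point. This is a sketch, not the author's original script: the name `seed_everything` is hypothetical, and the guarded imports are an assumption made so that the same helper also works in scripts where NumPy or PyTorch is not installed.

```python
import os
import random


def seed_everything(seed: int = 0) -> None:
    """Seed every RNG discussed so far that is importable in this environment."""
    # Only affects interpreters started after this point (e.g. subprocesses).
    os.environ["PYTHONHASHSEED"] = str(seed)
    random.seed(seed)

    try:
        import numpy as np
    except ImportError:
        pass  # NumPy is not installed, so there is nothing to seed
    else:
        np.random.seed(seed)

    try:
        import torch
    except ImportError:
        pass  # PyTorch is not installed, so there is nothing to seed
    else:
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # silently ignored when CUDA is unavailable
        torch.backends.cudnn.benchmark = False
        torch.backends.cudnn.deterministic = True


# Re-seeding with the same value replays the same random stream
seed_everything(0)
a = random.random()
seed_everything(0)
b = random.random()
print(a == b)
```

Keeping everything in one function makes it harder to forget a generator when you add a new training script.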

- CRAZYYYY issues with the dataloader: On a multi-processing platform such as a server (whether you work with GPUs or CPUs), the dataloader can behave in a very strange manner.

  The craziest thing ever is the behavior of the dataloader when you run your code on a server with a strong, multi-processing platform. The tricky case I am going to describe did not happen on my local laptop or desktop, only on a server, regardless of whether GPUs or CPUs were used, and that is what makes this reproducibility issue a truly nasty one! The problem is that if you define a batch size for your dataloader, chances are that the last batch will not be completely filled. For instance, if your batch size is 32, the last batch might contain fewer samples, say 10. In my runs, and only on a multi-processing platform like a server, the remaining space appeared to be filled with random junk! I have no idea why (PyTorch’s documented behavior with drop_last=False is simply a smaller last batch). But this made my code NOT reproducible, and I know of no way to seed this particular source of randomness. As a solution, you could switch to FULL BATCH learning, or, if you insist on mini-batch learning, you could ask the dataloader to LITERALLY drop the last batch by setting the drop_last flag to True!

```python
from torch.utils.data import DataLoader

# full_batch, train_data, dataset and batch_size are defined elsewhere in your script.

# Option 1: full-batch mode, i.e. set batch_size to the size of your ENTIRE training set
if full_batch:
    batch_size = train_data.shape[0]
    dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=False)
# Option 2: if full batch is not an option, set the boolean flag drop_last to True!
# Otherwise, the last (incomplete) batch, if there is one, ended up containing random values
# in my runs (this crazy phenomenon happened to me on 2 different servers, one with GPU
# support and the other with JUST CPU support). Isn't this ridiculous???!!!
else:
    dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=False, drop_last=True)
```
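Dropping the last batch has a cost, so it is worth checking how many samples you give up. The arithmetic below is a small sketch with a hypothetical helper, `batch_counts`; the dataset size of 330 is an assumed number chosen to match the batch-size-32, leftover-10 example above.

```python
import math


def batch_counts(num_samples: int, batch_size: int) -> dict:
    """Compare the number of batches per epoch with and without drop_last."""
    return {
        "batches_keep_last": math.ceil(num_samples / batch_size),
        "batches_drop_last": num_samples // batch_size,
        "samples_dropped": num_samples % batch_size,
    }


# 330 samples with a batch size of 32: 10 full batches plus 10 leftover samples
print(batch_counts(330, 32))
```

With shuffle=False the same trailing samples are skipped in every epoch, so if those samples matter, picking a batch size that divides the dataset size evenly avoids the loss altogether.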

- Some rarer issues with the dataloader: In some rare cases, the dataloader itself needs to be seeded! (And no, seeding it did not fix the previous problem with the last batch.) You see, the dataloader has “workers” behind the scenes: separate processes that load your data, each carrying its own random state for anything random that happens while loading, such as augmentation. So, you MIGHT have to seed these workers as well! The way to seed them is a little strange. DataLoader takes an argument called “worker_init_fn”, which expects a function (yeah, I know, weird!) that is called once in each worker with that worker’s ID, and in that function you seed the worker. It only has an effect when num_workers is greater than 0. Here is how you do it:

```python
import numpy as np
from torch.utils.data import DataLoader

# This function takes a worker's ID, adds a value, say 12, to it,
# and uses the result to seed NumPy's randomness for that particular dataloader worker.
# It is called once in each worker of the dataloader.
def _init_fn(worker_id):
    np.random.seed(12 + worker_id)

# And this is how you pass the function to the dataloader
# (it is only called when num_workers > 0)
dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True, worker_init_fn=_init_fn)
```
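A variant of the same idea follows the pattern in PyTorch's reproducibility notes: derive each worker's seed from torch.initial_seed() and give the dataloader its own torch.Generator so that the shuffling order is fixed too. The sketch below assumes a toy TensorDataset and a hypothetical `make_loader` helper; num_workers is left at 0 so the snippet runs anywhere, which means seed_worker is only invoked once you raise it.

```python
import random

import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset


def seed_worker(worker_id: int) -> None:
    """Derive NumPy and Python seeds from the per-worker seed that PyTorch assigns."""
    worker_seed = torch.initial_seed() % 2**32
    np.random.seed(worker_seed)
    random.seed(worker_seed)


def make_loader(dataset, batch_size: int, seed: int, num_workers: int = 0) -> DataLoader:
    # A dedicated generator fixes the shuffling order independently of global state.
    g = torch.Generator()
    g.manual_seed(seed)
    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,  # worker_init_fn only runs when this is > 0
        worker_init_fn=seed_worker,
        generator=g,
    )


dataset = TensorDataset(torch.arange(10))  # toy dataset, purely illustrative
order_a = [batch[0].tolist() for batch in make_loader(dataset, 4, seed=0)]
order_b = [batch[0].tolist() for batch in make_loader(dataset, 4, seed=0)]
print(order_a == order_b)  # both loaders visit the samples in the same order
```

Compared with a fixed offset per worker, deriving the seed from torch.initial_seed() ties the workers to the main seed, so changing one number changes the whole run consistently.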

- Seeding dependent scripts everywhere: IF your main function calls other functions and scripts that you have coded in separate files, make sure that you have seeded EVERYTHING in those scripts and files as well.

- Seeding any algorithm in any package that you are using: If you are using any packages, make sure that you have seeded every source of randomness in them. For example, if you are using scikit-learn’s K-means clustering algorithm, you MUST know that it initializes the cluster centers randomly! So, you need to seed that randomness for the sake of reproducibility, as shown below:

```python
# Import KMeans from scikit-learn
from sklearn.cluster import KMeans

# Create a KMeans object with 10 cluster centers, seeded with random_state=0,
# and fit it on train_data so that it learns proper clusters.
kmeans = KMeans(n_clusters=10, random_state=0).fit(train_data)
```
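For the earlier point about helper functions that live in separate files, one way to make the seeding harder to forget is to pass an explicit random number generator into the helpers instead of relying on global state, much like random_state does for KMeans. This is only a sketch of that idea; `shuffled_indices` and `split_train_val` are hypothetical helpers, not functions from the original script.

```python
import random


def shuffled_indices(n: int, rng: random.Random) -> list:
    """Helper that could live in another module; it only uses the RNG it is given."""
    indices = list(range(n))
    rng.shuffle(indices)
    return indices


def split_train_val(n: int, val_fraction: float, seed: int):
    """Split n sample indices into train and validation parts, reproducibly."""
    rng = random.Random(seed)  # local generator, independent of random.seed()
    indices = shuffled_indices(n, rng)
    n_val = int(n * val_fraction)
    return indices[n_val:], indices[:n_val]


train_a, val_a = split_train_val(100, 0.2, seed=0)
train_b, val_b = split_train_val(100, 0.2, seed=0)
print(train_a == train_b and val_a == val_b)  # the split repeats for the same seed
```

The split stays the same even if some other part of the program consumes random numbers from the global generator in between.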

It is a wonderful post!

The same goes for TensorFlow. According to the TensorFlow documentation, operations that use a random seed depend on seeds at both the global and the operation level.

Here is the link with more details on setting the seed if you use TensorFlow:

https://www.tensorflow.org/api_docs/python/tf/random/set_seed

Perfect!